In 2024, it’s almost trivial to create realistic AI-generated images of people, which has led to fears about how these deceptive images might be detected. Researchers at the University of Hull recently unveiled a new method for detecting AI-generated deepfake images by analyzing reflections in human eyes. The technique, presented last week at the Royal Astronomical Society’s National Astronomy Meeting, adapts tools astronomers use to study galaxies to probe the consistency of light reflecting off eyeballs.

Adejumoke Owolabi, an MSc student at the University of Hull, led the research under the supervision of Dr Kevin Pimbblet, Professor of Astrophysics.

Their detection technique is based on a simple principle: a pair of eyes illuminated by the same set of light sources will typically have a similarly shaped set of light reflections in each eyeball. Many AI-generated images created to date do not account for eyeball reflections, so the simulated light reflections are often inconsistent between each eye.

The astronomical angle isn’t strictly necessary for this kind of deepfake detection, since a quick glance at a pair of eyes in a photo can often reveal inconsistent reflections, something portrait painters must account for. What is new is applying astronomical tools to automatically measure and quantify eye reflections in deepfakes.

Automated detection

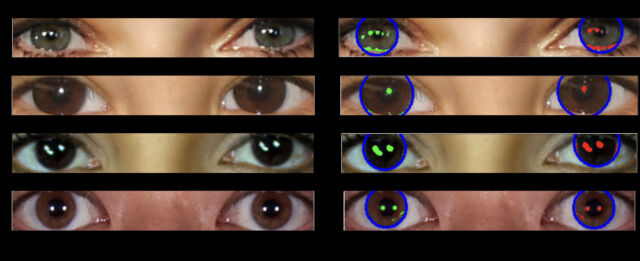

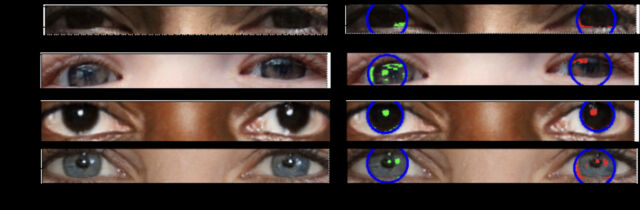

In a blog post for the Royal Astronomical Society, Pimbblet explained that Owolabi had developed a technique to automatically detect eyeball reflections and then ran morphological features of those reflections through indices that compare the left and right eyeballs. Their findings revealed that deepfakes often show differences between the two eyes.
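The article does not say how the reflections were isolated or which similarity indices were used, so the following is only a minimal sketch under stated assumptions. It treats each eye as an aligned, same-sized grayscale crop, marks pixels above a hypothetical fraction (0.8) of the crop's peak brightness as specular highlight, and scores left/right agreement with the Jaccard index (intersection over union) of the two masks. The function names, the threshold, and the choice of Jaccard are illustrative stand-ins, not the team's method.

```python
import numpy as np

def reflection_mask(eye, frac: float = 0.8) -> np.ndarray:
    """Mark pixels at or above `frac` of the crop's peak as highlight.

    `frac` is a hypothetical threshold chosen for illustration only.
    """
    eye = np.asarray(eye, dtype=float)
    peak = eye.max()
    if peak <= 0:
        # A completely dark crop has no highlight at all.
        return np.zeros(eye.shape, dtype=bool)
    return eye >= frac * peak

def mask_similarity(left: np.ndarray, right: np.ndarray) -> float:
    """Jaccard index (intersection over union) of two same-sized boolean masks.

    1.0 means the highlights coincide exactly; 0.0 means no overlap.
    """
    if left.shape != right.shape:
        raise ValueError("eye crops must be aligned and the same size")
    union = np.logical_or(left, right).sum()
    if union == 0:
        return 1.0  # neither eye shows a highlight: treat as consistent
    return float(np.logical_and(left, right).sum() / union)

left = np.zeros((8, 8))
left[2:4, 5:7] = 1.0
right = np.zeros((8, 8))
right[2:4, 4:6] = 1.0  # same highlight shifted one pixel to the left
print(round(mask_similarity(reflection_mask(left), reflection_mask(right)), 3))  # 0.333
```

A low score would flag the pair for closer inspection; in practice the crops would first need to be aligned, which this sketch simply assumes.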

The team applied methods from astronomy to quantify and compare eyeball reflections. They used the Gini coefficient, which is commonly used to measure the distribution of light in galaxy images, to assess how evenly the reflected light is spread across the pixels of each eye. A Gini value close to 0 indicates evenly distributed light, while a value approaching 1 indicates light concentrated in a single pixel.
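The form of the Gini coefficient widely used in galaxy-morphology work sorts the n pixel values in increasing order, X_1 <= ... <= X_n, and computes G = sum over i of (2i - n - 1)|X_i|, divided by (mean|X|) n (n - 1). That normalization gives exactly 0 for uniform light and exactly 1 when all light sits in one pixel, matching the description above. The sketch below implements it with NumPy and adds a hypothetical `gini_mismatch` score (the absolute difference between the two eyes). It assumes the reflection pixels have already been extracted; the study's exact pixel selection and decision threshold are not given here.

```python
import numpy as np

def gini(pixels) -> float:
    """Gini coefficient of pixel values: 0 = light spread evenly, 1 = all light in one pixel."""
    x = np.sort(np.abs(np.asarray(pixels, dtype=float).ravel()))  # ascending order
    n = x.size
    if n < 2 or x.sum() == 0:
        return 0.0  # undefined for empty, single-pixel, or all-dark input
    i = np.arange(1, n + 1)  # 1-based rank of each sorted pixel
    return float(np.sum((2 * i - n - 1) * x) / (x.mean() * n * (n - 1)))

def gini_mismatch(left_eye, right_eye) -> float:
    """Hypothetical consistency score: how far apart the two eyes' Gini values are."""
    return abs(gini(left_eye) - gini(right_eye))

print(gini(np.ones(16)))  # 0.0  (perfectly even light)
spike = np.zeros(16)
spike[3] = 5.0
print(gini(spike))        # 1.0  (all light in one pixel)
```

Real eyes would fall between these extremes; the idea is that a genuine photo should give similar Gini values for both eyes, so a large `gini_mismatch` is a warning sign rather than proof.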

In the Royal Astronomical Society release, Pimbblet drew comparisons between how they measured the shape of the eyeball’s reflection and how they typically measure the shape of galaxies in telescope images: “To measure the shapes of galaxies, we analyse whether they are centrally compact, whether they are symmetric, and how smooth they are. We analyse the light distribution.”

The researchers also investigated CAS parameters (concentration, asymmetry, smoothness), another astronomical tool for measuring the light distribution of galaxies. However, this method proved less effective than the Gini coefficient at identifying fake eyes.
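A simplified sketch of two of the three CAS quantities follows, assuming a grayscale reflection image centred in the array. Asymmetry compares the image with a copy rotated by 180 degrees; smoothness compares it with a boxcar-smoothed copy, counting only positive residuals. Published definitions also optimize the rotation centre, subtract a background term, and scale or mask the smoothness sum. Those steps, and concentration (which needs a radial light profile), are omitted here, and the function names and 3-pixel box size are assumptions.

```python
import numpy as np

def asymmetry(img) -> float:
    """Simplified CAS asymmetry: sum|I - I_180| / sum|I|, rotating about the array centre."""
    img = np.asarray(img, dtype=float)
    total = np.abs(img).sum()
    if total == 0:
        return 0.0
    rotated = np.rot90(img, 2)  # two 90-degree turns = 180-degree rotation
    return float(np.abs(img - rotated).sum() / total)

def box_smooth(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Mean filter over a k x k box (k odd), replicating edge pixels at the borders."""
    if k < 1 or k % 2 == 0:
        raise ValueError("box size k must be a positive odd integer")
    h, w = img.shape
    padded = np.pad(img, k // 2, mode="edge")
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]  # accumulate each shifted copy
    return out / (k * k)

def smoothness(img, k: int = 3) -> float:
    """Simplified CAS smoothness: positive residuals after boxcar smoothing, over total light."""
    img = np.asarray(img, dtype=float)
    total = img.sum()
    if total == 0:
        return 0.0
    residual = np.clip(img - box_smooth(img, k), 0.0, None)  # keep only excess light
    return float(residual.sum() / total)

eye = np.zeros((5, 5))
eye[2, 2] = 9.0  # a single bright, centred highlight
print(asymmetry(eye))             # 0.0
print(round(smoothness(eye), 3))  # 0.889
```

A single sharp point of light is perfectly symmetric but very "clumpy", which illustrates why these galaxy-shape measures may not map neatly onto small, point-like eye reflections.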

An arms race in detection

While the eye reflection technique offers a potential path for detecting AI-generated images, it may stop working if AI models evolve to render physically accurate eye reflections, for example through a correction step applied after image generation. The technique also requires a clear, close-up image of the eyes to work.

The approach also risks false positives, since even authentic photos can show inconsistent eye reflections because of varied lighting conditions or post-processing. Still, analyzing eye reflections can be one useful tool in a broader deepfake detection toolkit that also weighs other factors such as hair texture, anatomy, skin details, and background consistency.

While the technique shows promise in the short term, Dr Pimbblet cautioned that it is not perfect. “There are false positives and false negatives; it’s not going to catch everything,” he told the Royal Astronomical Society. “But this method gives us a baseline, a plan of attack, in the arms race to detect deepfakes.”